Home

What is it?

- The home page is where users get started with NeuralSeek and can set up a chatbot in a few moments (see NeuralSeek onboarding).

Why is it important?

- The home page of NeuralSeek is a crucial feature for swift chatbot deployment. Users can efficiently set up their virtual agents by providing organization details, connecting to their KnowledgeBase, and selecting a preferred Large Language Model. The option to configure organization details, tune documentation parameters, and auto-generate actions streamlines the process. Additionally, NeuralSeek addresses a common challenge by offering automated question generation based on the KnowledgeBase content, saving time and improving interaction quality. The ability to input, categorize, and review questions, along with uploading test questions for analytics, makes it an indispensable tool for users aiming to create effective and responsive virtual agents in a matter of moments.

How does it work?

- Basics: Users provide NeuralSeek with general information about their organization.

- Data: Where users connect to their KnowledgeBase (can also be done under the “Configure” tab).

- LLM (only available with the Bring Your Own LLM plan): Users select their preferred Large Language Model (LLM) and must enter the appropriate integration information (e.g. the API key for their LLM) in order to continue.

- About: Describe the organization and use case preferences.

- Tune: Provide information about the documentation/KnowledgeBase.

- Q&A: Auto-generate a list of actions to set up a virtual agent in minutes.

Users can also perform the following actions through the home page:

- Auto-generate questions: Virtual agents typically require an adequate number of questions to create meaningful intents and dialogs, and these questions usually have to be written manually. NeuralSeek can instead generate a good set of commonly asked questions for you, based on the contents of your KnowledgeBase.

- Manually input questions: If you already have a good set of questions that your users frequently ask, you can upload them into NeuralSeek. NeuralSeek can then categorize them and create answers for you to review and curate later.

- Upload test questions: Use this to test a set of questions, validate whether their coverage or confidence scores are good enough, or see what their analytics look like. A template CSV file is provided.

Configure

What is it?

- The Configure tab allows users to modify settings for NeuralSeek features.

Why is it important?

- This functionality allows for a highly customizable and adaptable user experience, enabling organizations to optimize the performance of NeuralSeek in accordance with their unique use cases. Whether it involves adjusting default configurations for standard use or delving into advanced configurations for more nuanced preferences, the "Configure" tab empowers users to fine-tune NeuralSeek's capabilities. This level of customization ensures that NeuralSeek becomes a versatile and effective tool, capable of delivering optimal results across diverse organizational contexts.

How does it work?

- For more information refer to our Reference Material - Configuration section.

Integrate

What is it?

- The Integrate tab provides users with detailed instructions for integrating NeuralSeek with selected Virtual Agents, a WebHook, the API, or a self-hosted LLM.

Why is it important?

- NeuralSeek provides comprehensive guidance on selected integrations which allows for a more user-friendly experience.

How does it work?

- The Integrate tab on NeuralSeek's user interface provides step-by-step instructions on how to connect to various virtual agent frameworks. Once connected, users are able to call on NeuralSeek through the chosen framework as either a "fallback intent" or other action.

- Custom Extension: This contains the information to build a custom NeuralSeek extension within Watson Assistant.

- LexV2 Lambda: Use AWS Lambda to send user input that routes the Lex FallbackIntent to NeuralSeek. Used in conjunction with AWS LexV2.

- LexV2 Logs: How to enable Round-Trip Logging using LexV2 Logs, to monitor the usage of curated intents. The purpose of round-trip logging is to improve the virtual agent’s performance by analyzing the data and identifying areas for improvement.

- Watson Logs: How to enable Round-Trip Logging using Watson Logs, to monitor the usage of curated intents. The purpose of round-trip logging is to improve the virtual agent’s performance by analyzing the data and identifying areas for improvement.

- WebHook: This is the backbone of NeuralSeek: it is how users connect to and communicate with the solution. Any application (e.g. Slack, ServiceNow) that can forward a question to the WebHook and receive an answer back can call it.

- API (REST): Where to find the information needed to invoke NeuralSeek’s REST API, and to navigate and test it directly on its OpenAPI-generated page. You can get example JSON requests and responses, as well as the JSON schema of the message payloads.

- KoreAI: Activate Round-Trip monitoring for deployed NeuralSeek Intents. This feature enables NeuralSeek to continuously monitor the usage of its curated intents through KoreAI event Tasks. It will promptly alert you if any curated intents require updates due to changes in the associated KnowledgeBase documents.

- Console API: This integration allows users to access debugging and monitoring features conveniently from within the NeuralSeek application, simplifying tasks such as error identification, performance analysis, and data insights without the need to switch between different tools or interfaces. It enhances the user experience by providing seamless access to the Console API's functionality within NeuralSeek's interface, streamlining development and monitoring tasks.
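As a rough illustration of the LexV2 Lambda route described above, the sketch below shows how a Lambda function might forward the Lex `FallbackIntent` utterance to NeuralSeek and hand every other intent back to Lex. The event and response shapes follow the Amazon Lex V2 Lambda format. The `ask_neuralseek` callable is a hypothetical placeholder for whatever call your instance uses (the Integrate tab lists the actual details); it is not a NeuralSeek function.

```python
from typing import Any, Callable, Dict


def make_handler(ask_neuralseek: Callable[[str, str], str]):
    """Build a Lex V2 Lambda handler around a question-answering callable.

    ask_neuralseek(question, session_id) is a hypothetical helper that would
    call NeuralSeek and return the answer text. It is an assumption made for
    this sketch, not part of any NeuralSeek library.
    """

    def handler(event: Dict[str, Any], context: Any) -> Dict[str, Any]:
        intent = event["sessionState"]["intent"]

        # Any intent other than the fallback is handed back to Lex unchanged.
        if intent["name"] != "FallbackIntent":
            return {
                "sessionState": {
                    "dialogAction": {"type": "Delegate"},
                    "intent": intent,
                }
            }

        # Forward the raw user utterance and close the intent with the answer.
        question = event.get("inputTranscript", "")
        answer = ask_neuralseek(question, event.get("sessionId", ""))
        return {
            "sessionState": {
                "dialogAction": {"type": "Close"},
                "intent": {"name": intent["name"], "state": "Fulfilled"},
            },
            "messages": [{"contentType": "PlainText", "content": answer}],
        }

    return handler
```

Passing the NeuralSeek call in as a parameter keeps the routing logic testable without network access, for example `make_handler(lambda question, session_id: "stub answer")`.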

Extract

What is it?

- Extract lets users extract entities detected in user-provided text. The interface lets users enter text, then automatically tries to extract the entities it finds and lists them. You can also add, update, or delete any number of custom entities if you want to specify certain entities more precisely or create a new type of entity. For more information, see entity extraction.

Why is it important?

- This feature is integral for efficient entity extraction from user-provided text. Its user-friendly interface simplifies the process, allowing users to input text seamlessly and receive an automatic extraction of entities, presented in a comprehensive list. The added functionality of custom entities further enhances precision, enabling users to refine entity specifications or introduce new types. This flexibility is crucial for tailoring the extraction process to specific needs, ensuring accuracy, and accommodating diverse use cases. The ability to add, update, or delete custom entities reflects the adaptability of the tool, making it a valuable asset for tasks requiring nuanced entity recognition and management.

How does it work?

- Users will input text and click the 'Extract' button next to the text box. Below, users will be able to see relevant information automatically extracted and matched to NeuralSeek's current system entities, without having to pre-specify. By defining custom entities, users are able to streamline extraction to their specifications.
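To make the idea of custom entities concrete, here is a minimal sketch that treats each custom entity as a name mapped to a regular expression and returns the matches found in a piece of text. This is only an illustration of the concept: the entity names, patterns, and the `extract_entities` function are assumptions for this example, and NeuralSeek's own detection of system entities is not described here and is likely more sophisticated.

```python
import re
from typing import Dict, List, Tuple

# Hypothetical custom entities: an entity name mapped to a regular expression.
CUSTOM_ENTITIES: Dict[str, str] = {
    "order_id": r"\bORD-\d{4}\b",
    "email": r"\b[\w.+-]+@[\w-]+\.[\w.]+\b",
}


def extract_entities(text: str, entities: Dict[str, str]) -> List[Tuple[str, str]]:
    """Return (entity_name, matched_text) pairs in order of appearance."""
    found = []
    for name, pattern in entities.items():
        for match in re.finditer(pattern, text):
            # Keep the start offset so results can be ordered by position.
            found.append((match.start(), name, match.group()))
    found.sort()
    return [(name, value) for _, name, value in found]
```

Adding, updating, or deleting a custom entity then corresponds to editing one key of the dictionary, which is why custom entities are a convenient way to narrow extraction to a specific use case.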

mAIstro

What is it?

- The mAIstro feature is a versatile and innovative platform, offering an open-ended playground for "retrieval augmented generation". It empowers users to seamlessly integrate their preferred Large Language Model (LLM), select from a range of data sources including KnowledgeBases, websites, local files, or typed text, and employ the NeuralSeek Template Language (NTL) markup for dynamic content retrieval. Notably, mAIstro enhances data by incorporating features like summarization, stopword removal, and keyword extraction, all while providing expert guidance with LLM prompt syntax and base weighting. With the ability to output results to an editor or directly to a Word document, mAIstro delivers a powerful and user-friendly experience, making it a standout feature in content generation and retrieval.

Why is it important?

- Efficient Content Retrieval: mAIstro simplifies the process of accessing and retrieving content from various sources. This efficiency is crucial for anyone who relies on accurate and relevant information.

- Enhanced Data Quality: mAIstro enhances data quality by providing tools for summarization, stopwords removal, and keyword extraction. This ensures that the retrieved content is refined, concise, and tailored to the user's needs, saving time and effort in manual data preprocessing.

- User-Friendly Interface: mAIstro offers a user-friendly interface that makes interacting with Language Models and crafting dynamic prompts accessible to a broader audience. This accessibility is vital for individuals who may not have advanced technical skills but still require the benefits of advanced language models.

- Expert Guidance: mAIstro provides users with expert guidance by pre-configuring LLM prompt parameters and model-specific base weights. This guidance helps users achieve optimal results without the need for in-depth knowledge of language model intricacies.

- Output Flexibility: The ability to output results to an editor or directly to a Word document enhances flexibility and convenience for users, allowing them to seamlessly integrate the generated content into their workflows.

- Semantic Scoring: The incorporation of a Semantic Scoring model allows users to assess the relevance and alignment of generated content with their specific requirements. This feature adds a layer of precision and control to the content generation process.

How does it work?

- mAIstro streamlines the interaction with Language Models, making it accessible and user-friendly while providing powerful tools for content retrieval and enhancement. Users can seamlessly integrate retrieved content into their workflows with precision and control, making it a valuable asset for various professional fields.

- For more information refer to our Reference Material sections: mAIstro Visual Editor and mAIstro Functions and NTL.

Seek

What is it?

- NeuralSeek’s Seek feature enables users to test questions and generate answers using content from their connected KnowledgeBase. To ensure transparency between the sources and answers, NeuralSeek highlights where the answers are coming from within the KnowledgeBase. Semantic match scores are employed to compare the generated responses with the original documentation, providing a clear understanding of the alignment between the response and the meaning conveyed in source documents. This process ensures accuracy and instills confidence in the reliability of the responses generated by NeuralSeek.

Why is it important?

- This feature empowers users to obtain precise and well-contextualized answers by allowing users to pose queries and generate relevant responses using information extracted from the linked documentation. The emphasis on transparency is a key strength, with NeuralSeek highlighting the specific sources within the KnowledgeBase to enhance accountability and traceability of information. The incorporation of semantic match scores adds an extra layer of assurance. This process not only guarantees accuracy but also instills confidence in the reliability of the answers provided by NeuralSeek, making it an invaluable tool for users seeking trustworthy and well-supported information.

How does it work?

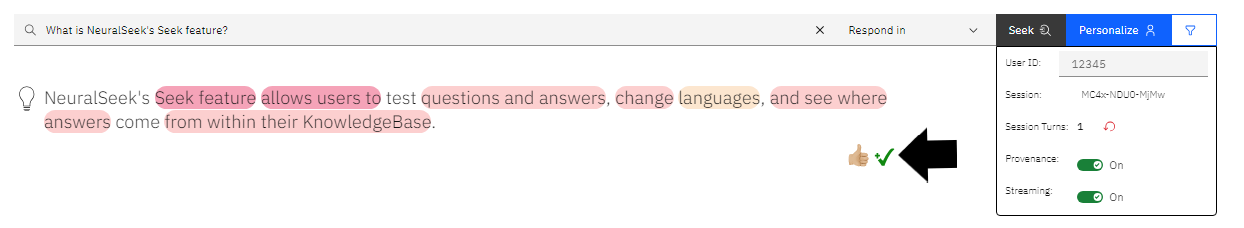

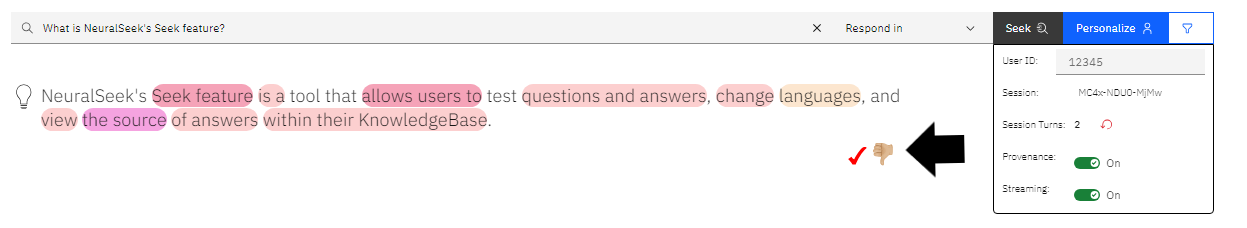

- Users begin by inputting a query, defining the language of the query, and then clicking the 'Seek' button. A relevant answer will automatically generate below for the user to review. Other features on the page include:

- User ID: Users are able to view and set a User ID to test conversations.

- Session ID: A unique "Session ID" number is generated. Users can start a new session with a new unique "Session ID" number by clicking the red arrow next to "Session Turns".

- Session Turns: A "Session Turns" number is generated, which allows the user to view how many turns were generated in the corresponding Session ID.

- Highlight Answer Provenance: Enabling this feature shows which parts of a response come from the trained answer and which additional parts come from the KnowledgeBase itself. Users can enable or disable it.

- Answer Streaming: Streaming can be enabled or disabled. When it is enabled, the response is generated word by word; when it is disabled, the whole response is generated at once.
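The relationship between User ID, Session ID, and Session Turns can be pictured with a small sketch like the one below. It is a conceptual model only: the `SeekSession` class and its fields are hypothetical names chosen for this example and do not reflect how NeuralSeek stores sessions internally.

```python
import uuid
from dataclasses import dataclass, field
from typing import List


@dataclass
class SeekSession:
    """Hypothetical model of one test conversation on the Seek tab."""

    user_id: str
    session_id: str = field(default_factory=lambda: uuid.uuid4().hex)
    turns: int = 0
    history: List[str] = field(default_factory=list)

    def ask(self, question: str) -> int:
        """Record one question as a turn and return the new turn count."""
        self.history.append(question)
        self.turns += 1
        return self.turns

    def reset(self) -> None:
        """Start a fresh session: new ID, empty history, zero turns."""
        self.session_id = uuid.uuid4().hex
        self.history.clear()
        self.turns = 0
```

In this model, each question asked in the same session increments the turn count, and `reset()` plays the role of the red arrow next to "Session Turns".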

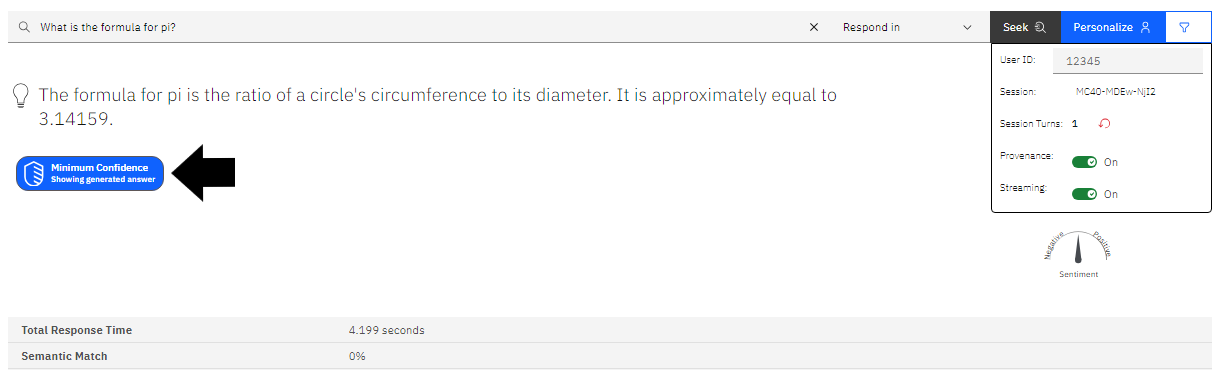

| Information Output | Description |
|---|---|
| Total Response Time | The total time, in seconds, taken to generate a response. |
| Semantic Match % | The overall match score, indicating how well NeuralSeek believes the response aligns with the underlying ground truth from the KnowledgeBase. The higher the percentage, the more accurate and relevant the answer is relative to that ground truth. |
| Semantic Analysis | A summary, in easy-to-understand language, of why NeuralSeek calculated the match score it did. This helps users understand why the answer was given a high or low score. |
| KnowledgeBase Confidence % | How confident the KnowledgeBase is that the retrieved sources are related to the given question. |
| KnowledgeBase Coverage % | How much of the given question the KnowledgeBase thinks the retrieved sources cover. |
| KnowledgeBase Response Time | The time, in seconds, taken by the KnowledgeBase to generate a response. |
| KnowledgeBase Results | The number of retrieved sources the KnowledgeBase thinks are related to the given question. |

Other Uses

Users are able to provide feedback on answers by clicking the "Thumbs Up" or "Thumbs Down" icons.

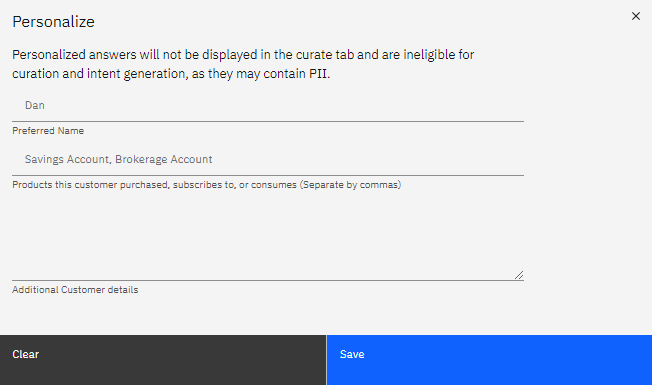

Users can personalize and filter through their KnowledgeBase documents on the Seek tab as well.

For Seeks with low semantic match scores, users can click the "minimum confidence" icon to see what the answer would have been.

For more information, see Semantic Analytics.

Curate

What is it?

- NeuralSeek's Curate feature allows users to view intents generated from the KnowledgeBase, import and export intents into the virtual agent, and manage example questions and answers. The content and parameters of each 'Intent' can be adapted and adjusted to accommodate employee and customer needs.

- Users can also view the results of other features, such as round-trip logging, merge/unmerge actions, whether the intent contains any Personally Identifiable Information (P.I.I.), and whether the source KnowledgeBase information has changed, so that they can easily detect whether the generated answers need to be updated.

- Sometimes it is easier to curate all the questions and answers outside of NeuralSeek and upload them in a batch. Use the Curate feature to upload and update the curated Q&As (CSV format is supported). A template CSV file is provided.

Why is it important?

- This feature enhances the user experience by providing a streamlined and accessible interface. Additionally, it enables users to closely monitor incoming queries and the corresponding generated answers, allowing for a better understanding of user interactions. Users are able to easily identify outdated information within the connected documentation through the displayed coverage and confidence scores, facilitated by the built-in semantic scoring model. Furthermore, the ability to adjust answers and parameters ensures customization to better align with user queries and intents. Lastly, the feature allows users to view and modify auto-generated queries for each intent, providing a comprehensive toolkit for refining and optimizing responses. Overall, these functionalities collectively contribute to a more effective and tailored knowledge management experience.

How does it work?

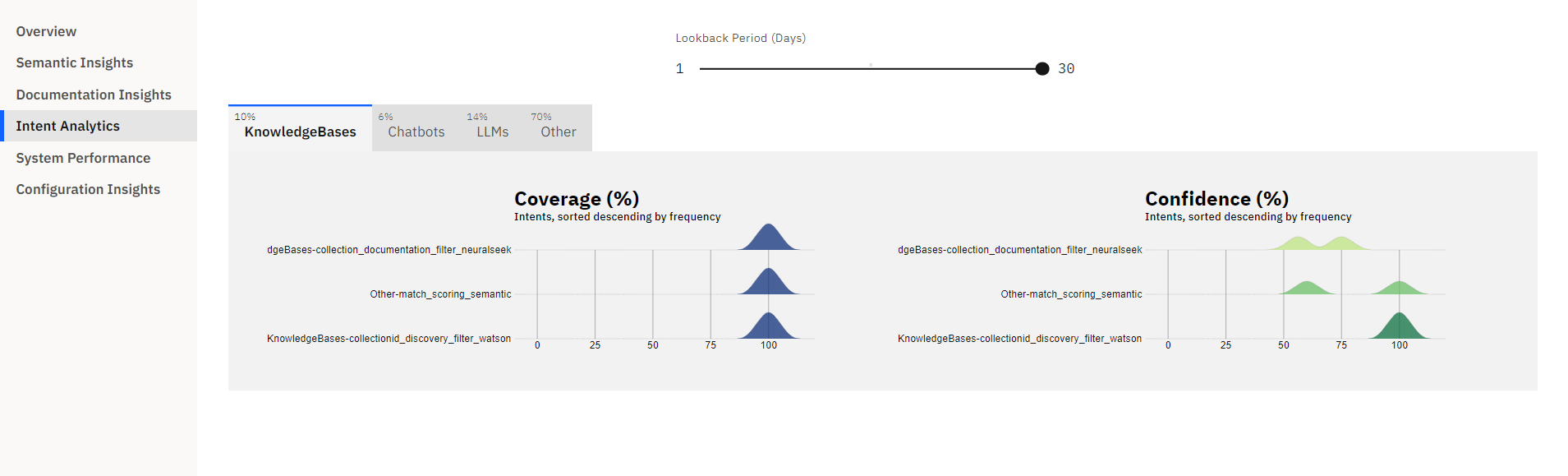

- The Curate feature in NeuralSeek's UI allows users to manage intents and answers efficiently. Accessed through the Curate tab, the UI comprises columns such as Intent, displaying categorized questions with indicators for status; Q&A, indicating the number of questions and answers per intent; Coverage %, showing KnowledgeBase contribution; and Confidence %, reflecting the likelihood of user satisfaction. The trend graphs use color codes for coverage and confidence states. Users can hover over a graph to observe changes over time. Intents and answers can be displayed, searched, and filtered based on various criteria. Users can edit, delete, or back up answers, and perform operations on intents, such as merging or renaming. Caution is advised for irreversible actions like deletion and merging.

- See Curation of Answers for more info.

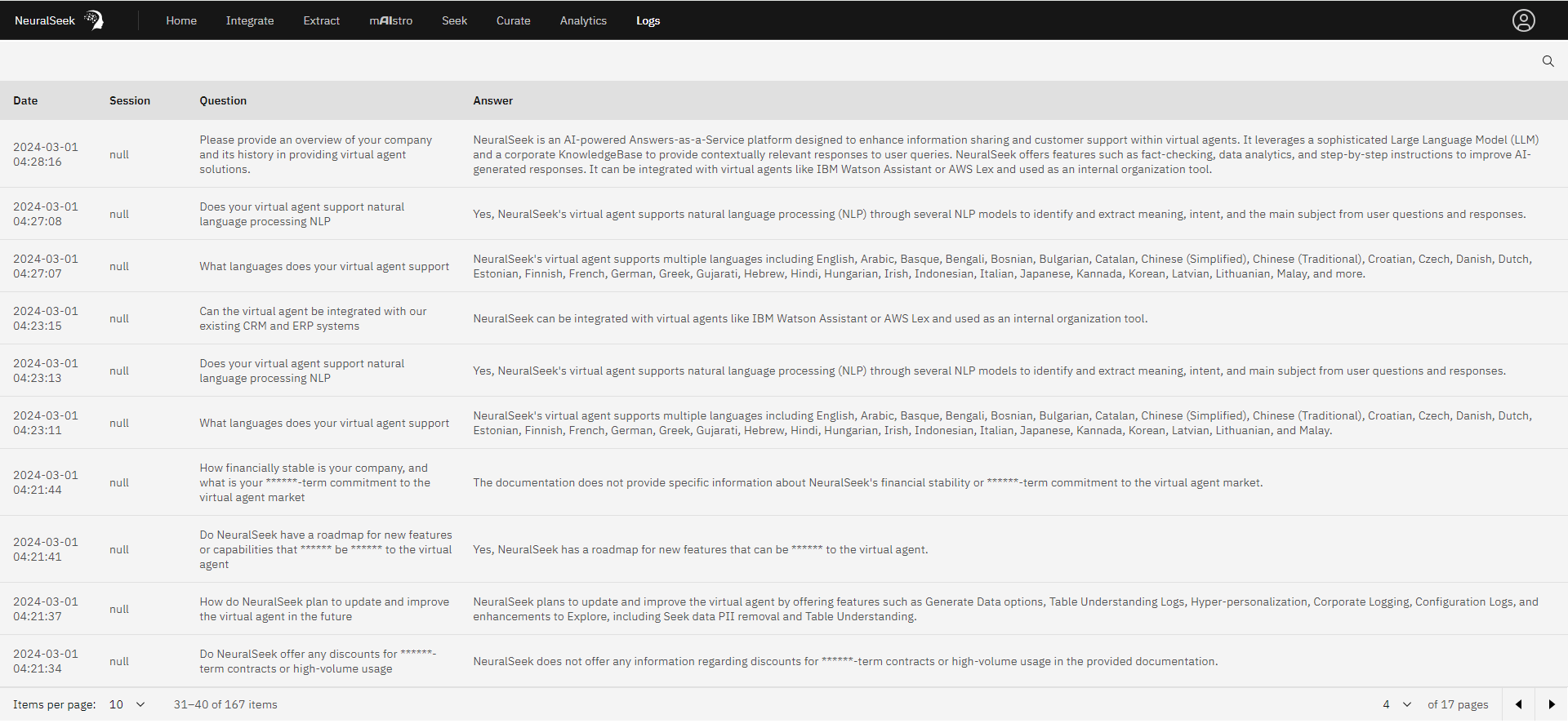

Logs

What is it?

- In NeuralSeek, users can access the usage log generated from interactions with the Seek feature through the Logs tab. This feature allows users to efficiently filter their log history by date, session ID, question, and answer for a more streamlined and informative experience.

Why is it important?

- This feature empowers users to analyze and track user interactions with the Seek feature, offering valuable insights into system performance. The efficient filtering options enhance the usability of the log, providing a streamlined experience. This functionality is important for troubleshooting, understanding user behavior, and making informed decisions to improve the overall efficiency and effectiveness of the Seek feature within NeuralSeek.

How does it work?

- Users are able to view the history of questions and answers, search for specifics using the magnifying glass icon, or filter by date, session ID, question, and answer using the arrows provided.
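The filtering behavior described above can be sketched as a simple function over log records. This is an illustrative model, not NeuralSeek's implementation: the `LogEntry` fields and the `filter_logs` function are assumptions made for this example, chosen to mirror the four filter criteria (date, session ID, question, and answer).

```python
from dataclasses import dataclass
from datetime import date
from typing import Iterable, List, Optional


@dataclass
class LogEntry:
    """Hypothetical shape of one row in the usage log."""

    day: date
    session_id: str
    question: str
    answer: str


def filter_logs(
    entries: Iterable[LogEntry],
    start: Optional[date] = None,
    end: Optional[date] = None,
    session_id: Optional[str] = None,
    text: Optional[str] = None,
) -> List[LogEntry]:
    """Keep entries that satisfy every filter that was supplied."""
    result = []
    for entry in entries:
        if start is not None and entry.day < start:
            continue
        if end is not None and entry.day > end:
            continue
        if session_id is not None and entry.session_id != session_id:
            continue
        # Case-insensitive search across both question and answer.
        if text is not None:
            haystack = (entry.question + " " + entry.answer).lower()
            if text.lower() not in haystack:
                continue
        result.append(entry)
    return result
```

Filters left as `None` are ignored, so the same function covers a plain text search, a date-range view, or a single-session drill-down.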

Governance

What is it?

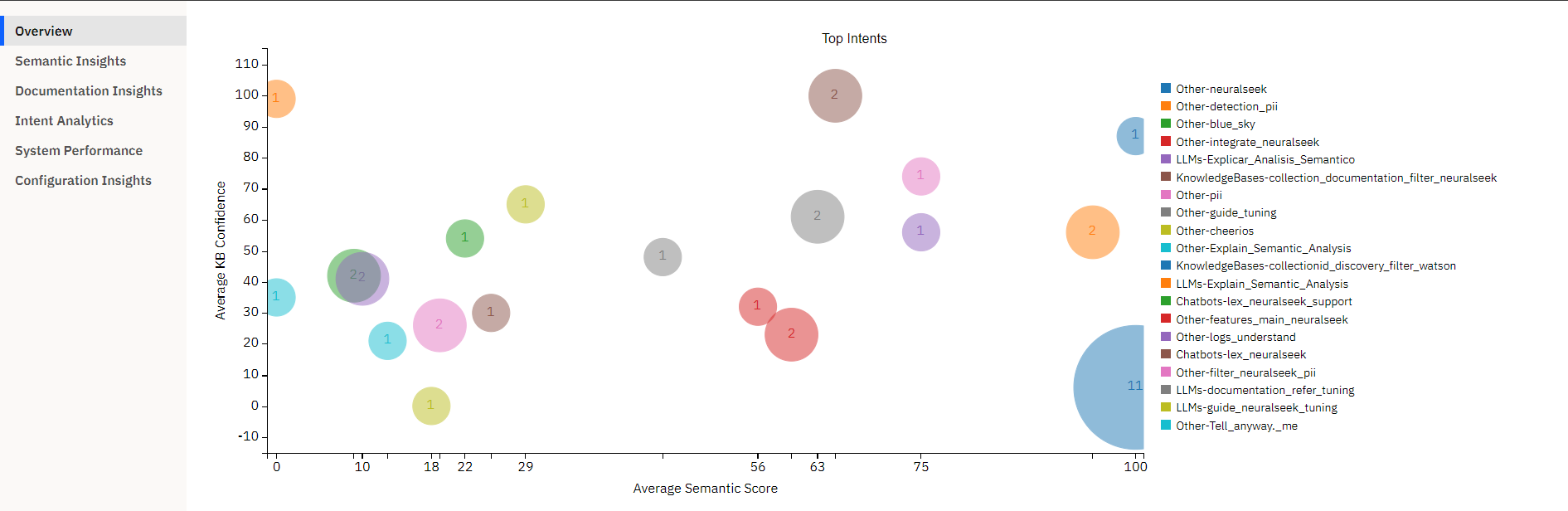

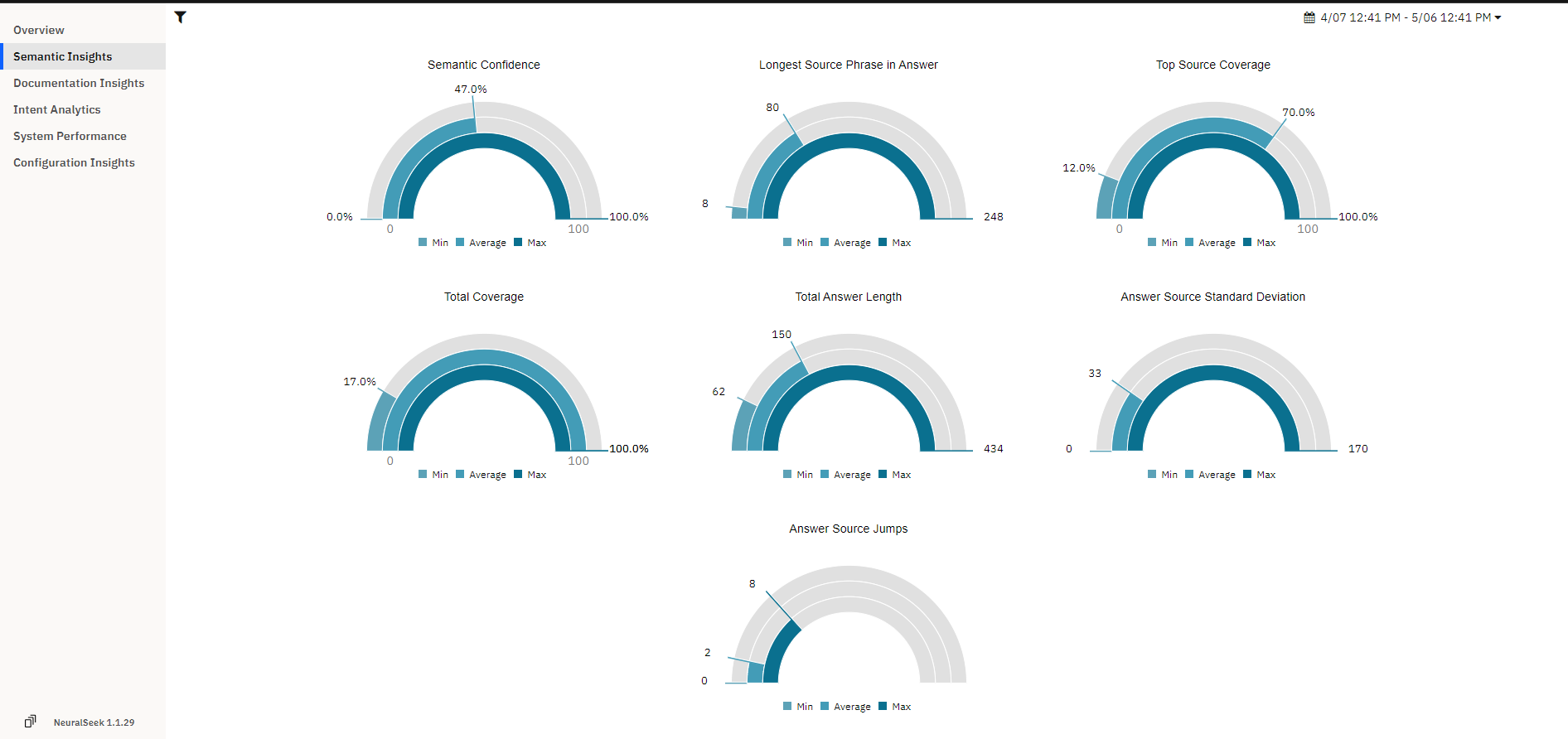

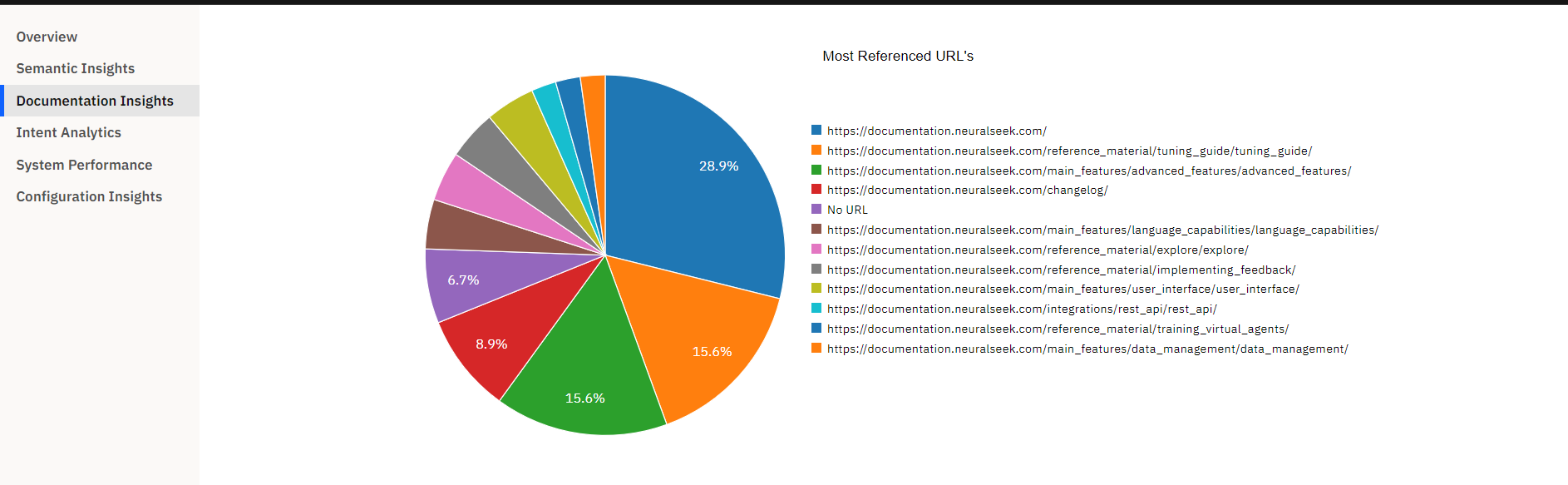

- The Governance tab is a comprehensive tool designed to provide users with a holistic view of Retrieval Augmented Generation (RAG) governance. It serves as a centralized platform where users can access various insights and metrics related to the governance of their NeuralSeek system.

Why is it important?

- NeuralSeek's Governance ensures the effective management and oversight of NeuralSeek systems. With features like semantic insights, documentation insights, intent analytics, system performance, and configuration insights, users gain valuable information to make informed decisions about their NeuralSeek instance. This level of transparency and control is essential for maintaining the integrity and efficiency of NeuralSeek processes.

How does it work?

-

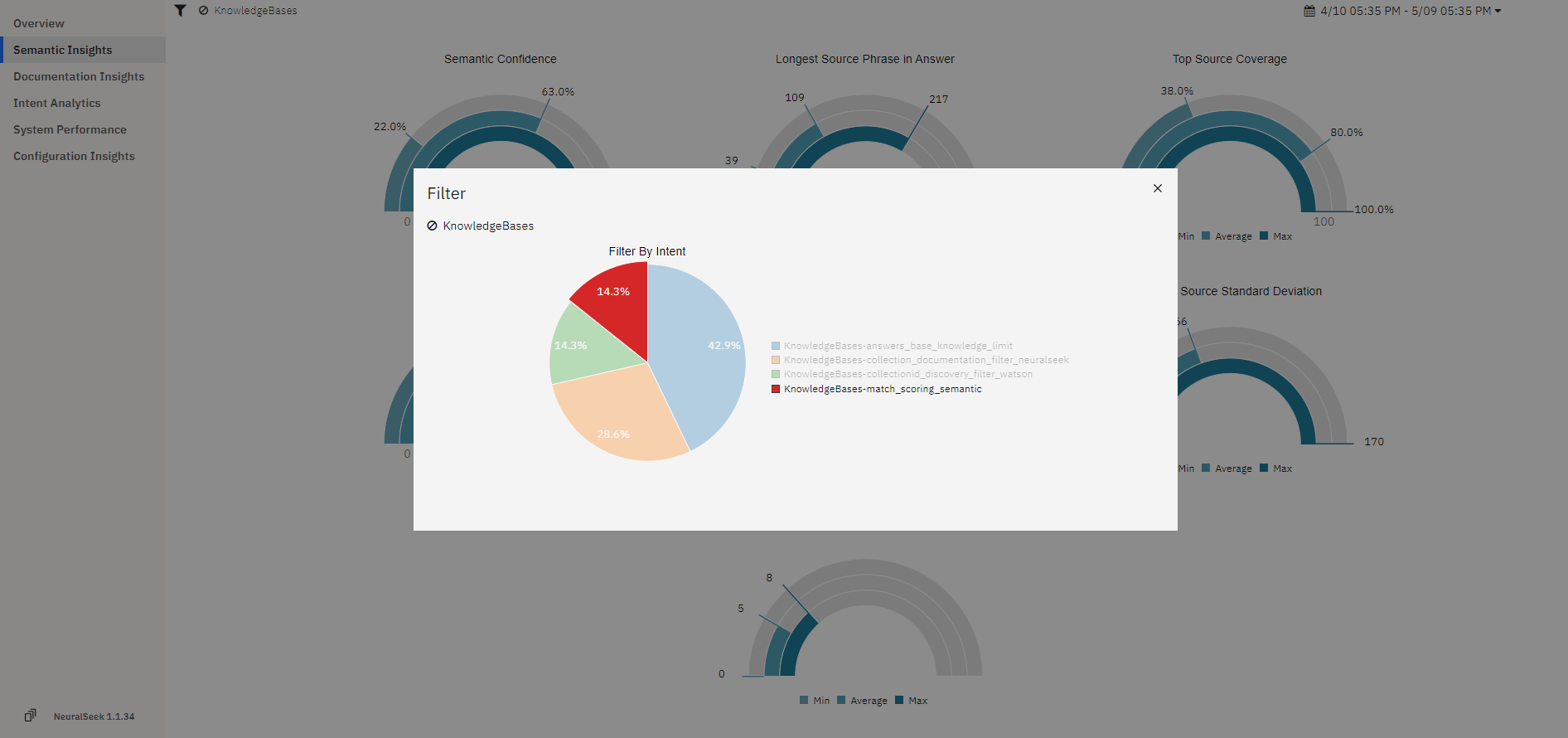

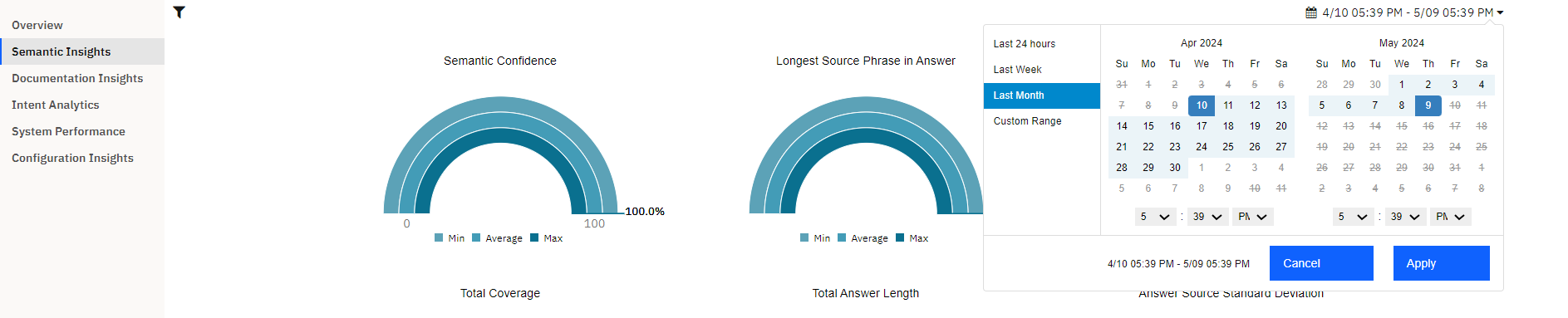

The Governance tab operates by aggregating and analyzing data from various sources within the NeuralSeek platform. By consolidating these insights in one accessible interface, NeuralSeek's Governance tab empowers users to make well-informed decisions regarding their NeuralSeek governance strategies. Additionally, the Goverance tab's dyanmic interface allows users to filter by intent, category, or date for a more specified scope of internal analytics.

-

Semantic insights provide users with a deeper understanding of the underlying meaning and context of generated content. This allows for users to monitor the quality of the answers being generated.

- Documentation insights offer visibility into the sources and references used by NeuralSeek so users are able to track what documentation is frequently used and how it is performing.

- Intent analytics help users understand what intents are trending, and how they are performing. The Governance tab allows for easy tracking of both model and document regression over time.

- System performance metrics track the efficiency and reliability of your NeuralSeek instance.

- Configuration insights allow users to monitor configuration changes and track churn over time for optimal performance.

- Filtering in Governance allows users to narrow or broaden the scope of the analytics to their specification. Users can filter by category and intent by clicking the filter icon in the top left corner. Users can filter by date by clicking the date range in the top right corner, or by clicking and dragging over a specified date range in the System Performance charts.
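As a sketch of the kind of aggregation that intent analytics and filtering imply, the example below averages a semantic match score per intent over a date range, optionally restricted to one category. The record layout and the `average_score_by_intent` function are assumptions for this example. NeuralSeek's internal data model and metrics are not documented here.

```python
from collections import defaultdict
from datetime import date
from typing import Dict, Iterable, Optional, Tuple

# Hypothetical record: (day, category, intent, semantic match %).
Record = Tuple[date, str, str, float]


def average_score_by_intent(
    records: Iterable[Record],
    start: date,
    end: date,
    category: Optional[str] = None,
) -> Dict[str, float]:
    """Average the score per intent for records inside the date range."""
    totals = defaultdict(lambda: [0.0, 0])  # intent -> [score sum, count]
    for day, record_category, intent, score in records:
        if not (start <= day <= end):
            continue
        if category is not None and record_category != category:
            continue
        totals[intent][0] += score
        totals[intent][1] += 1
    return {intent: total / count for intent, (total, count) in totals.items()}
```

Comparing the result for two adjacent date ranges is one simple way to spot an intent whose answer quality is regressing over time.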